AlexNet Paper Note

ImageNet Classification with Deep Convolutional Neural Networks (AlexNet)

Published Year: 2012

Paper URL

Note: These are the notes and insights I wrote while reading papers. I will probably post two or three a week, starting with some classic papers.

What

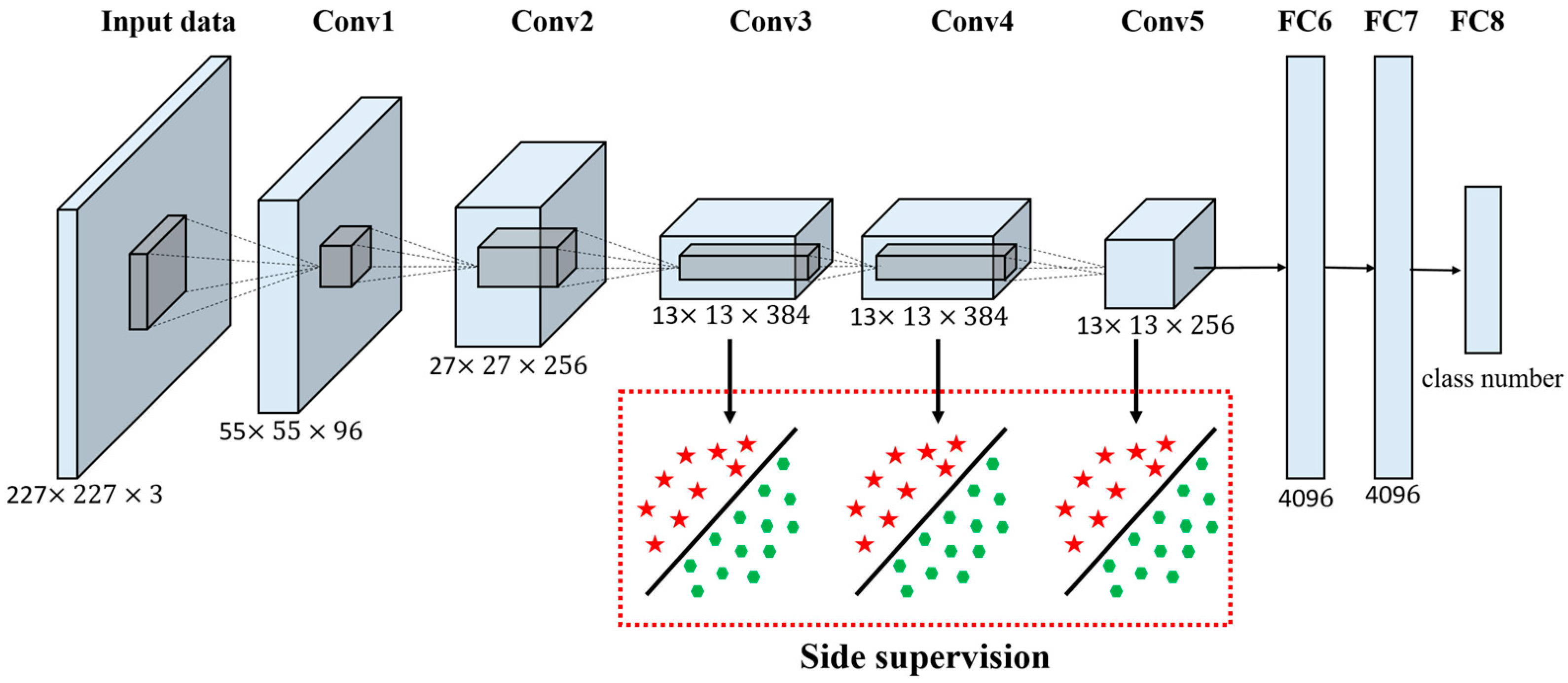

- Uses a large, deep convolutional neural network to classify the 1.2 million training images of ImageNet LSVRC-2010 into 1000 classes, achieving top-1 and top-5 test error rates of 37.5% and 17.0%, considerably better than the previous state of the art (sparse coding methods).
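Top-k error counts an example as wrong when the correct label is not among the model's k highest-scoring classes, so top-5 error is always at most top-1 error. Below is a minimal pure-Python sketch of the metric; `top_k_error` and the toy scores are hypothetical, and the example uses 3 classes with k = 2 instead of ImageNet's 1000 classes with k = 5.

```python
def top_k_error(scores, labels, k):
    """Fraction of examples whose true label is not among the k highest-scoring classes."""
    errors = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by score, highest first; keep the first k.
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if label not in top_k:
            errors += 1
    return errors / len(labels)

# Hypothetical scores for 3 examples over 3 classes.
scores = [[0.1, 0.7, 0.2],
          [0.5, 0.3, 0.2],
          [0.2, 0.3, 0.5]]
labels = [1, 1, 0]

print(top_k_error(scores, labels, 1))  # 2 of 3 examples wrong
print(top_k_error(scores, labels, 2))  # 1 of 3 examples wrong
```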

Why

- To achieve higher image-classification accuracy.

- To reduce overfitting in the fully-connected layers.

- To train a large, deep neural network faster.

How

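- This architecture (about 60 million parameters and 650,000 neurons) is computationally expensive, which makes training slow, and its size makes it prone to overfitting. The authors therefore introduce several techniques to address these problems.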

ReLU Nonlinearity

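- Use ReLU as the activation function because it trains much faster than tanh: in the paper, a four-layer convolutional network with ReLUs reaches a 25% training error rate on CIFAR-10 six times faster than an equivalent network with tanh neurons.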
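The paper attributes the speed-up to ReLU, f(x) = max(0, x), being a non-saturating nonlinearity: the gradient of tanh shrinks toward zero as |x| grows, while ReLU's gradient stays at 1 for every positive input. A small sketch comparing the two derivatives; the function names are illustrative, not from the paper.

```python
import math

def relu(x):
    """ReLU activation f(x) = max(0, x)."""
    return max(0.0, x)

def relu_grad(x):
    """Subgradient of ReLU: 1 for positive inputs, 0 otherwise (0 chosen at x == 0)."""
    return 1.0 if x > 0 else 0.0

def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)^2, which vanishes for large |x|."""
    return 1.0 - math.tanh(x) ** 2

# The tanh gradient decays quickly as the input grows; the ReLU gradient does not.
for x in [0.5, 2.0, 5.0]:
    print(f"x={x}: relu'={relu_grad(x):.4f}, tanh'={tanh_grad(x):.4f}")
```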

Multiple GPUs

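- Spread the network across two GTX 580 GPUs (3 GB of memory each), because a single GPU's memory limits how large a network can be trained. Each GPU holds half of the kernels, and the GPUs communicate only in certain layers. This setup also trains slightly faster than a comparable one-GPU network.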
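In the paper, kernels in some layers (e.g. layer 3) read all kernel maps of the previous layer, while kernels in other layers (e.g. layer 4) read only the maps stored on their own GPU. That restricted connectivity behaves like a grouped convolution. Below is a minimal pure-Python sketch at a single spatial position with 1x1 kernels, a simplification chosen for illustration; `grouped_channel_mix` is a hypothetical helper, not code from the paper.

```python
def grouped_channel_mix(x, weights, groups):
    """Mix channels at one spatial position (a 1x1 convolution) with grouping.

    x:       activations of the input kernel maps at one position
    weights: weights[o] holds output map o's weights over the inputs of its group
    groups:  1 = every output reads every input (GPUs communicate);
             2 = each output reads only the inputs on its own "GPU"
    """
    c_in, c_out = len(x), len(weights)
    in_per_group = c_in // groups
    out_per_group = c_out // groups
    out = []
    for o in range(c_out):
        g = o // out_per_group  # which "GPU" output map o lives on
        inputs = x[g * in_per_group:(g + 1) * in_per_group]
        assert len(weights[o]) == len(inputs), "weight count must match group size"
        out.append(sum(w * v for w, v in zip(weights[o], inputs)))
    return out

x = [1.0, 2.0, 3.0, 4.0]  # maps 0-1 on "GPU 0", maps 2-3 on "GPU 1"
split = grouped_channel_mix(x, [[1, 1]] * 4, groups=2)
full = grouped_channel_mix(x, [[1, 1, 1, 1]] * 4, groups=1)
print(split)  # [3.0, 3.0, 7.0, 7.0]
print(full)   # [10.0, 10.0, 10.0, 10.0]
```

With `groups=2` each output map needs only half as many weights, and a change on one "GPU" never reaches outputs on the other.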

Local Response Normalization

$$b^i_{x,y} = a^i_{x,y} \Big/ \left(k + \alpha \sum_{j=\max(0,\, i-n/2)}^{\min(N-1,\, i+n/2)} \left(a^j_{x,y}\right)^2\right)^{\beta}$$

- Denoting by $a^i_{x,y}$ the activity of a neuron computed by applying kernel $i$ at position $(x, y)$ and then applying the ReLU nonlinearity, the response-normalized activity $b^i_{x,y}$ is given by the expression above, where the sum runs over $n$ "adjacent" kernel maps at the same spatial position and $N$ is the total number of kernels in the layer. The ordering of the kernel maps is arbitrary and is fixed before training begins. This response normalization implements a form of lateral inhibition inspired by the type found in real neurons, creating competition for large activities among neuron outputs computed with different kernels. The constants $k$, $n$, $\alpha$, and $\beta$ are hyper-parameters whose values are determined using a validation set.

- This type of normalization is not usually seen today.
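Local response normalization can be made concrete with a small pure-Python sketch that normalizes the activities $a^0, \dots, a^{N-1}$ of all kernel maps at one spatial position $(x, y)$. The defaults are the values the paper reports (k = 2, n = 5, α = 10⁻⁴, β = 0.75); treating n/2 as integer floor division is an assumption, and `local_response_norm` is a hypothetical name.

```python
def local_response_norm(a, k=2.0, n=5, alpha=1e-4, beta=0.75):
    """Normalize activities a[0..N-1] of N kernel maps at one spatial position.

    b[i] = a[i] / (k + alpha * sum(a[j]**2 for j in window(i))) ** beta,
    where window(i) runs from max(0, i - n//2) to min(N - 1, i + n//2).
    """
    N = len(a)
    half = n // 2  # assumption: the paper's n/2 is floored, so n = 5 gives 2
    b = []
    for i in range(N):
        lo = max(0, i - half)
        hi = min(N - 1, i + half)
        # Squared activities of the "adjacent" kernel maps, clipped at the edges.
        denom = (k + alpha * sum(a[j] ** 2 for j in range(lo, hi + 1))) ** beta
        b.append(a[i] / denom)
    return b

a = [1.0] * 10
a_strong = list(a)
a_strong[5] = 50.0  # one strongly active kernel map

# Map 3 sees map 5 in its window and is suppressed; map 0 is too far away.
print(local_response_norm(a)[3], local_response_norm(a_strong)[3])
print(local_response_norm(a)[0], local_response_norm(a_strong)[0])
```

The strongly active map lowers the normalized outputs of its neighbours, which is the "competition for large activities" the paper describes.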

And?

- By today's standards these results may not look impressive, but they were the state of the art in 2012. Although the paper is now about ten years old, its influence is still visible in a large body of later research in machine learning and computer vision.

Comments